Key Findings

Examples of some of our recent findings

Why do women live so long after reproductive cessation?

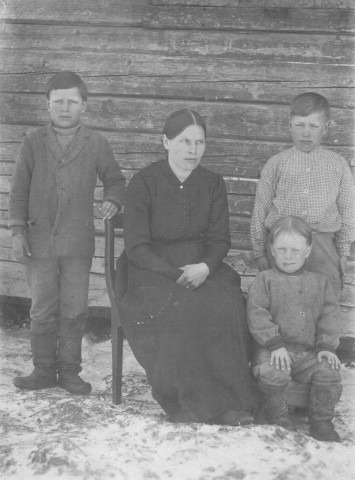

Extended post-reproductive life is rare in animals. Humans are one of only a handful of species to exhibit this unusual life-history trait, but its evolution remains an unsolved question in biology. In these studies we use theories developed to explain family living and cooperative breeding in birds and mammals, and examine whether they can be used to understand the evolution and dissolution of family living and cooperative breeding in humans.

First, what are the ecological factors that promote family living?

Second, does the presence of potential helpers (pre-reproductive offspring and post-reproductive grandparents) influence lifetime reproductive success and overall fitness of breeders?

Thus far, we have shown that women who survived long after menopause had more grandchildren than those who died earlier (18th and 19th century Finland and Canada). We have found that the longer a woman lived after the end of her reproductive years, the more successful her children’s reproductive lives were. If their mother was alive to help them, these children tended to begin their families earlier, have shorter gaps between children, have longer reproductive lives, and produce offspring that were more likely to survive into adulthood. In evolutionary terms this is a benefit, because women who survive long after stopping reproduction are more likely to forward more genes to the next generation. However, grandmothers are not always beneficial: older and weaker paternal grandmothers are detrimental to grandchild survival, whilst old maternal grandmothers no longer positively influence grandchild survival.

Do family members cooperate or compete?

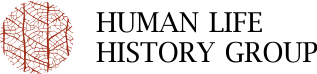

Studying the evolution of cooperative breeding and group living requires simultaneous quantification of both helping benefits and competitive costs within groups. Effects of cooperation and competition may have promoted the evolution of birth scheduling, dispersal patterns and life-history traits, including menopause, that avoid resource competition. Human groups consist of cooperative individuals of varying relatedness, which is predicted to lead to conflict when resources are limited and relatedness is low. So far, we have used our large historical demographic data sets to investigate conflicts between family members in three different ways.

First, our data have clearly shown that grandmothers increased the fitness of their offspring by helping them to reproduce and by enhancing the survival of their grandoffspring. However, our research has also revealed that when a daughter-in-law reproduced alongside her mother-in-law, the offspring survival of both women suffered. Simultaneous reproduction of daughters and mothers did not have adverse effects on their offspring. This result agrees with kin selection theory, since unrelated women competed more than closely related women. Such conflict between two generations of unrelated women is predicted to have led to the evolution of menopause in humans. In patrilocal societies women move to their mate's residence and are thus not related to the other women in the group, potentially leading the younger woman to win the evolutionary conflict over reproduction.

Second, we have found similar reproductive conflict among unrelated women in Karelian joint families, where brothers share a household with their families. Simultaneous reproduction of co-resident women (the wives of different brothers) had an adverse effect on their offspring's survival.

Third, we have investigated whether the same family member could be a helper or a competitor depending on life stage. We have found that the presence of elder siblings improved the chances of younger siblings surviving to sexual maturity, suggesting that despite competition for parental resources, elder siblings may help to rear their younger siblings. After reaching sexual maturity, however, the presence of same-sex elder siblings was associated with reduced reproductive success, indicating competition among same-sex siblings.

A quantitative genetic perspective on human life history evolution

We apply a quantitative genetic framework to a species which is traditionally not studied from an evolutionary perspective – humans. The central focus of human behavioural ecology has recently begun to shift from asking how the behaviour of modern humans reflects the history of our species, to looking at natural selection and finally to measuring current selection in contemporary populations. We study humans from an evolutionary perspective, and explore the possibilities and limitations of an evolutionary approach to human behaviour, life-history evolution and the interplay between the sexes.

Thus, we study the genetic architecture underlying key life history traits in a human population, with a special focus on intralocus sexual conflict on the genetic level and the evolution of genetic variances and covariances (the G-matrix) over time and across contexts. The idea that a trait can be beneficial in one sex but detrimental in the other, resulting in a sexual conflict and hence different selection pressures in the two sexes, has received tremendous attention in the field of evolutionary biology, but we know little about the genetic basis of sexual conflict in humans. We used an extensive genealogical dataset to show that the phenotypic selection gradients differed between the sexes regarding age at first and last reproduction, reproductive lifespan and reproductive rate in this pre-industrial Finnish population. Further, all traits were significantly heritable in both sexes. However, the genetic correlations with fitness were almost identical in men and women and intersexual genetic correlations were generally high, thus constraining the evolution of further sexual dimorphism to resolve the conflict.

In a second study, we looked at how the genetic variances and covariances (the G-matrix) between key life-history traits changed over time as the population went through extensive environmental and societal changes over the past 300 years. We found that the G-matrices remained largely stable but showed a trend towards increased additive genetic variance, and therefore increased evolutionary potential of the population, after the transition. Thus, predictions of evolutionary change in human populations are possible even after recent dramatic environmental change. This facilitates predictions of how our biology interacts with changing environments, with implications for global public health and demography.

Natural selection may take things in new and unexpected directions

We examined how the strength of natural selection changed across a period of reduction in mortality and fertility in two villages in Gambia. We used data on births and deaths to examine whether and how height and body mass might affect an individual’s ability to thrive and multiply (i.e. whether these traits were under selection) for every year over a period of more than 50 years. We chose to look at selection on these traits because they are known to influence survival and fertility. We found a far more complex picture than we anticipated - selection on body mass became more negative, and selection on height more positive.

What does this mean? Well, assuming such trends would be sustained over several generations (and also that migration was kept to a minimum, and that these traits had a heritable basis), we might expect that this could eventually lead to people becoming taller and leaner. Quite why, our data couldn’t tell us. But our results weren’t interesting to us so much for what they said about what could happen in those two specific Gambian villages in the future, but more for their broader implications. The findings highlight that even if the total opportunity for natural selection to occur may be reduced in low fertility and low mortality populations, the selection that does occur may take things in new and unexpected directions.

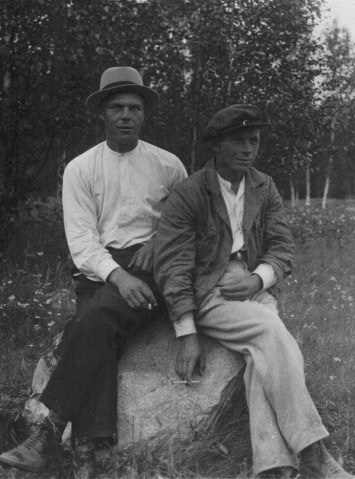

Natural and sexual selection in a monogamous historical human population

Despite advancements in medicine and technology, as well as an increased prevalence of monogamy, our research revealed that humans have continued to evolve since the agricultural revolution, just like any other species. It is a common misunderstanding to think that human evolution only took place a long time ago, and that to understand ourselves we must look back to the hunter-gatherer days of humans. Using church records of about 6,000 Finnish people born between 1760 and 1849, we could show that humans continued to be affected by both natural and sexual selection after the demographic, cultural and technological changes of the agricultural revolution. This was true although we know that the specific pressures, the factors that allow some individuals to survive better, or to have more success at finding partners and producing more children, have changed over time. As in most animal species, we also found that men and women are not equal with respect to Darwinian selection: characteristics increasing the mating success of men are likely to evolve faster than those increasing the mating success of women. This is because mating with more partners was shown to increase reproductive success more in men than in women. Surprisingly, however, selection affected wealthy and poor people in the society to the same extent.

How old church records are helping us to assess the impact of childhood disease and why we’re living longer?

The Great Exhibition of 1851, housed in London’s Crystal Palace, showcased the latest in culture and science, including the world’s largest diamond, a precursor to the fax machine and a barometer that worked entirely through leeches. Living conditions were tough, but having survived to the age of 20, a young Londoner attending the exhibition could expect to live until around 60. A century and a half later, 20-year-old Londoners watching the Olympics down the pub can expect to live to the age of 80.

But what is behind this extraordinary 50% increase in remaining lifespan at age 20 since the 1850s? Many factors are at play, including the development of contraception, vaccination, antibiotics and improved nutrition and hygiene. But the impact of each factor has remained a bit of a mystery. This is a shame, as understanding the precise nature of the forces behind lifespan is essential if we want to be able to predict how long we may live in the future. To shed some light on this, we investigated church records from Finnish individuals born between 1751 and 1850, just before this huge extension in lifespan, to work out how childhood diseases may affect a population’s lifespan.

As long ago as 1934, a study reported that a person’s chances of dying were determined not by the current year, but by the year in which they were born. The authors of this early study concluded that “the figures behave as if the expectation of life was determined by the conditions which existed during the child’s earlier years”. So what might these conditions be?

In 2004 a new paper put forward a compelling hypothesis to explain the link between early-life conditions and adult mortality. Infectious diseases are caused by bacteria and viruses, which elicit inflammatory immune responses. Many, or severe, infections can lead to chronic inflammation, which is linked to atherosclerosis, a hardening of the arteries, and thrombosis, coagulation of the blood, which are risk factors for cardiovascular disease and stroke. Since 1850, serious childhood infections such as smallpox and whooping cough have largely been eradicated by vaccination and hygiene in industrialised countries.

A decline in serious childhood infections could therefore explain why lifespan has increased: fewer chronic infections, lower inflammation, and less atherosclerosis could have led to a later onset of cardiovascular disease and therefore longer lifespan.

There is evidence that chronic infections in early life can have a long-lasting impact on inflammation. Union Army soldiers who suffered tuberculosis as young adults during the American Civil War had a 20% increase in risk of cardiovascular disease in middle age. Meanwhile, Tsimane forager farmers in the Bolivian Amazon who live with endemic parasite infections from early life have far higher levels of inflammation than people in the modern US. Despite this link, higher inflammation in the Tsimane does not lead to cardiovascular disease: they are protected by their excellent diet and active lifestyle. This suggests that such diseases are a result of modern lifestyles and may have been rare during human history.

But this is not the whole story. Intriguingly, people in the Ecuadorian Amazon, who also live with endemic infections, seem to have lower levels of inflammation than people in the modern US. This suggests that the experience of infections in early life may alter inflammatory responses in later life and prevent overzealous inflammation.

There is abundant evidence that high death rates in early life are linked to higher mortality in later life. Most such studies use data from pre-industrial populations, because an unfortunate drawback of studying modern people is that they tend to outlive the researchers. In these populations people born in years where the infant mortality rate is higher are consistently shown to have a higher mortality rate as adults.

There is, however, one issue with these findings. Infant mortality has decreased over time, and adult lifespan has increased. We assume a causal relationship between the two, but the link could be caused by something else that is related to both and has also changed over time.

For example, general improvements in living conditions could drive down infant mortality, through reduced infections, and increase adult lifespan, through improved diet. The link between reduced infant mortality and longer adult lifespan could therefore arise because of improvements in living conditions. To deal with this problem, a statistical technique called “de-trending” is used, so that the data provide information on infant mortality relative to the prevailing conditions.
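To make the idea of de-trending concrete, here is a minimal sketch. It assumes the long-term trend is a straight line fitted by least squares, which is only one of several ways to de-trend and is not necessarily the method used in the studies described here. The function name `detrend` and the mortality figures are made up for illustration.

```python
def detrend(years, rates):
    """Return each year's rate minus a straight-line trend fitted over all years.

    Assumption: the long-term trend is linear. Real analyses may use other
    trend models; this is an illustrative sketch only.
    """
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(rates) / n
    # Ordinary least-squares slope and intercept of rate against year.
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, rates))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # The residual is how much worse (+) or better (-) a year was than
    # the prevailing trend would lead us to expect.
    return [y - (intercept + slope * x) for x, y in zip(years, rates)]


# Hypothetical infant mortality rates (deaths per 1,000 births), declining over time.
years = [1800, 1801, 1802, 1803, 1804]
rates = [200.0, 190.0, 195.0, 170.0, 165.0]
residuals = detrend(years, rates)
```

In this made-up series, 1802 has a positive residual: mortality that year was high relative to the prevailing downward trend, even though it was lower than in 1800. It is this relative value, not the raw rate, that is then related to later-life outcomes.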

Lessons from pre-industrial Finland

Using this technique, recent studies have found negligible associations between infant mortality rate and later-life mortality risk. Something missing from these studies, however, is data on disease in early life: infant mortality rate is used as a proxy, but infant death could be related to other factors.

Recently, we used data from church records on death rates from infections in pre-industrial Finnish people. These records contain information about births, marriage, and death for thousands of people. From the death data, we could determine, in every year, what percentage of the children died from an infection. We assumed that lots of deaths meant lots of the surviving children were infected.

We used the “de-trending technique” to remove the change in child disease across time and studied how child infection exposure during the first five years of life influenced later life survival, cause of death, and fertility. The results were very clear: we found no link between infant disease exposure and later-life mortality, death from cardiovascular disease, or later-life fertility. Indeed, the evidence seems to be mounting that the link between early disease and adult lifespan is relatively weak. Similarly, the evidence that people in populations who experience frequent infections can moderate their inflammatory responses also weakens the link between infection and chronic inflammation.

Without a doubt, the fact that modern Londoners generally get enough food, have clean drinking water and access to free medical care will extend their lifespan. Current evidence suggests that these processes acting in adulthood seem to be the dominant factor.

All of this raises the inevitable question: at what point will human lifespan stop increasing? Certainly, every time a prediction is published, it is quickly exceeded. Many researchers agree that as long as living conditions continue to improve, average lifespan could increase for a little while longer, perhaps to the point at which it reaches 100 years. Research into life-extending treatments is exciting both scientifically and commercially, but given we don’t know what may be possible it is hard to predict the limits of human lifespan into the future. But that’s a whole other debate.

How early-life nutrition influences later-life survival and fertility

The conditions experienced by organisms during early life can have a profound effect on development and performance in later life. For example, maternal smoking can affect the health of children. Although the foetus is protected to a certain extent from the harsh world outside, maternal physiology still provides signals about the conditions into which an individual will be born. Perhaps the most important of these is nutrition, since the key life-history decisions made by an individual, such as whether to invest in reproduction or self-maintenance, are made in the context of limited resources which must be allocated among traits to maximise fitness. Changes which occur in the foetus in response to maternal nutrition have been implicated in the evolution of diabetes.

A foetus experiencing low maternal nutrition can tailor its development to expect those conditions in later life: for example, it can hold onto fat and break down blood glucose slowly. If, however, that individual experiences high nutrition in later life, the result will be an obese individual with high blood glucose: in other words, someone with a high risk of type 2 diabetes. Therefore, we should find that in low nutrition conditions, individuals who developed under low nutrition should be healthier than those who developed under high nutrition, because low-nutrition individuals would use their limited energy efficiently, while high-nutrition individuals would burn through it quickly. Meanwhile, under high nutrition conditions, individuals who developed under low nutrition conditions should be less healthy, because they will hold onto and store too much fat and glucose, while high-nutrition individuals will be able to burn through it and stay healthy.

A similar idea can explain how this scenario could have evolved: under low nutrition, individuals who developed under low nutrition will use their resources more effectively and have higher evolutionary fitness (survival and reproductive success), while those who developed under high nutrition burn through resources and so have low fitness. Under high nutrition, individuals who developed under low nutrition hold onto resources instead of turning them into fitness, while individuals who developed under high nutrition use the resources effectively and have high fitness. The ‘predictive adaptive response’ (PAR) hypothesis predicts that these fitness benefits occur in adulthood. The PAR hypothesis therefore predicts that, under low nutrition conditions in adulthood, individuals who developed under low nutrition should have higher fitness than those who developed under high nutrition, and that the opposite should be true under high nutrition conditions in adulthood. We tested this hypothesis using data collected from the Finnish church records.

Data on crop yields give us a measure of how much food was likely to be available to an individual. This assumption seems to have been validated by a study in which we found that years where crop yields were low were linked to higher mortality, especially in the poorest social class and the oldest individuals (Hayward et al. 2012). In two more recent studies, we tested the prediction that individuals experiencing low-nutrition or poor-quality conditions in later life should have higher fitness if they also experienced poor conditions in early life. In one study, we tested the probability of surviving and reproducing during a severe famine in the 1860s (Hayward et al. 2013). Contrary to the PAR hypothesis, however, we found that survival and reproductive success during the famine were lower in people who experienced low crop yields in early life, compared to people who experienced high crop yields in early life. This suggests that, even in the most adverse low-nutrition conditions, individuals who developed under low-nutrition conditions had lower fitness than individuals that developed under high nutrition conditions. In a second study, we found similar results, this time using child mortality rate as a measure of the quality of conditions in a given year (Hayward and Lummaa 2013): individuals who experienced poor conditions in early life always experienced lower fitness in later life, irrespective of later-life conditions.

Overall, the results suggest that long-term predictive adaptive responses are likely to be rare in humans. Since the strength of selection is strongest in early life, it is more likely that the fitness benefits of adaptive development in response to low nutrition are felt in early life, for example allowing the foetus to survive to parturition, or through the early stages of neonatal life.

How poor early conditions affect ability to cope with hardship later in life?

Adam Hayward's personal website: Fitter. Happier. Fewer early-life infections?

A person lucky enough to survive to the age of 20 in 1840s London could expect to live for a further 40 years, to the age of 60. In 2011, a 20-year-old Londoner would expect to live for a further 60 years, to the age of 80. That’s a 50% increase in remaining lifespan, in a little over 150 years. How has this happened?
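As a quick check of the arithmetic, using only the ages quoted above, the 50% figure refers to remaining years of life at age 20 rather than to total lifespan:

$$
\frac{(80-20)-(60-20)}{60-20} = \frac{60-40}{40} = 0.5 = 50\%
$$

Measured on total age at death instead, the same change (60 to 80) would be an increase of about 33%.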

In 19th century Europe, around 40% of children died of infectious diseases like smallpox, whooping cough and measles before they reached adulthood. However, it’s important to recognize that both of our 20-year olds survived to age 20, so the difference in their life expectancies has nothing to do with the fact that children in modern London are unlikely to catch dangerous infections. OR DOES IT?

Looking at patterns of mortality across time throws up some interesting results. For example, a study of 19th and 20th century populations in Britain and Sweden showed that the death rates of individuals were more closely related to their year of birth than to the year in which their mortality was assessed.

Mortality rates per thousand individuals in England and Wales, 1845-1925. The diagonals show data for the same cohort of individuals followed across their lives, and it’s apparent that the mortality rate remains similar as the cohort ages. The best predictor of mortality is birth year, not calendar year. For example, the cohort aged 10 in 1855 is the same group who are aged 60 in 1905, and their mortality rates are identical. Meanwhile, the cohort aged 10 in 1905 has a much lower mortality rate.

The authors concluded that:

The figures behave as if the expectation of life was determined by the conditions which existed during the child’s earlier years…the health of the child is determined by the environmental conditions existing during the years 0-15, and the health of the [adult] is determined preponderantly by the physical constitution which the child has built up.

So what might these ‘conditions’ be? An influential paper published in 2004 suggested that the link between early-life conditions and later-life mortality might be due to infections experienced in childhood. Infections elicit inflammatory immune responses, which may persist at a chronically high level. Chronic inflammation is linked to risk of heart disease, stroke and cancer in later life. The ‘cohort morbidity phenotype’ hypothesis suggests that since childhood infections have become increasingly rare, so have chronic inflammation, their associated pathologies and early death. Thus, we live longer.

A simplified version of the ‘cohort morbidity phenotype’ hypothesis. Infections cause inflammatory responses, which lead to atherosclerosis (thickening of artery walls) and thrombosis (clotting), which are linked to heart disease, stroke and mortality. The full version includes a couple of added nuances!

This is an exciting (and controversial) idea, so we tested it, in a new paper published in Proceedings of the National Academy of Sciences of the USA. The data we used came from, as I usually can’t help blurting out when meeting people and explaining what I do, ‘some dead Finnish people’. We had data on births, marriages and deaths from church records for over 7,000 individuals, born between 1751 and 1850, in seven different populations across Finland.

OK, I admit it: a number of previous studies have tested for links between early and later mortality. But…. these studies have looked at how many children born in a given year survived infancy (the ‘cohort mortality rate’), and then correlated that with the survival rate of these individuals in later life. Instead of using data on child deaths from all causes, we used data on child deaths from infections.

For each year of our study, and in each parish, we knew how many children were alive, and how many of those children died of an infectious disease. Our measure of disease exposure for a given birth year was the number of children who died of infectious diseases, divided by the number of children alive. We calculated this measure of disease exposure for each of the first five years of a child’s life. We then went on to use statistical models to determine the association between early disease exposure and:

- Mortality risk in adulthood

- Risk of mortality from cardiovascular disease, stroke and cancer

- Reproductive success
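The exposure measure described above can be sketched in a few lines. This is an illustration under stated assumptions, not our actual analysis code: the function names, the dictionary layout and every number in it are hypothetical, and the sketch covers one parish only.

```python
def disease_exposure(infection_deaths, children_alive):
    """Children who died of infectious disease, divided by children alive."""
    if children_alive <= 0:
        raise ValueError("children_alive must be positive")
    return infection_deaths / children_alive


def early_life_exposure(birth_year, parish_records, n_years=5):
    """Exposure in each of the first `n_years` years of a child's life.

    `parish_records` maps a calendar year to a tuple of
    (infection_deaths, children_alive) for one parish (hypothetical layout).
    """
    return [
        disease_exposure(*parish_records[birth_year + offset])
        for offset in range(n_years)
    ]


# Made-up records for a single parish: year -> (infection deaths, children alive).
parish_records = {
    1800: (12, 400),
    1801: (30, 410),
    1802: (8, 400),
    1803: (20, 500),
    1804: (5, 500),
}
exposures = early_life_exposure(1800, parish_records)
```

For a child born in 1800 in this invented parish, `exposures` holds five values, one for each of the first five years of life. Values like these are what would then be related to later-life outcomes in the statistical models.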

We predicted that our measure of early disease exposure should be linked with higher mortality risk, a greater risk of mortality from cardiovascular disease, stroke and cancers, and lower reproductive performance. And we found…

Nothing. There was no link between early disease exposure and adult (after age 15) mortality risk. We did find the expected differences between social classes, with wealthy farm owners and merchants surviving better than poor crofters and labourers, and between the sexes, with women surviving better than men. However, higher disease exposure was predicted to increase mortality risk by a piddling, and statistically insignificant, 2%.

We also found no association between early disease exposure and deaths specifically from heart disease, stroke and cancer. The (nowhere near statistically significant) trend was for a lower probability of death from these causes with increasing early disease exposure. Men were more likely to die from these causes, but there was no difference between the social classes.

Lastly, we found that reproductive success was not affected by early-life disease exposure. To take any survival effect out of the equation (not essential, so it turned out…), we only analysed people who survived to age 50, and who therefore had almost certainly reached the end of their reproductive lives. Early disease exposure was not linked to age at first birth, lifetime children born, child survival rate, or lifetime children surviving to adulthood. Excellent.

Normally at this point, there’d be loads of cool graphs and stuff…but we didn’t make any. Instead, take a look at the paper and the enormous supplement for details on the results! Before the headline conclusions….some caveats:

We have no idea who was exposed to disease. The only records of infections were where someone died and the cause was recorded in the church register as being of an infectious disease. This meant we had to assume that, in years when lots of children died of infections, our study individuals were (on average) more likely to get disease. But at worst, it’s possible that none of our study individuals ever got sick as kids.

What doesn’t kill you makes you stronger. It’s possible that individuals who were exposed to disease responded in two ways. They could have been weakened by disease and so died earlier, or they could have been the crème de la crème: robust enough to survive and be awesome at everything. A balance between damaged individuals and robust survivors would lead to…no net effect. So we may have actually found TWO cool effects…but without any evidence for them.

But…we did do some good things. We used a measure of disease exposure based on death from infectious disease, rather than deaths of all causes; we tested for effects on specific causes of death; we found the same patterns in seven parishes across Finland; we looked at effects on reproduction. We also did some cool (and relatively straightforward!) stats to remove temporal trends…but this isn’t the place for that.

Overall, we found absolutely bugger all evidence for a link between early-life disease exposure and mortality risk, cause of death, or reproductive success in later life. The results challenge the idea that extended lifespan in modern populations is due to reduced childhood disease exposure, but they certainly do not disprove it. It does seem, however, in common with a few other recent studies, that the early-life environment may have weaker effects on events occurring in adulthood than do the conditions experienced during adult life.

If you’re interested in the paper, but can’t access it, please do get in touch.